Face Detection: Haar Cascade vs. MTCNN

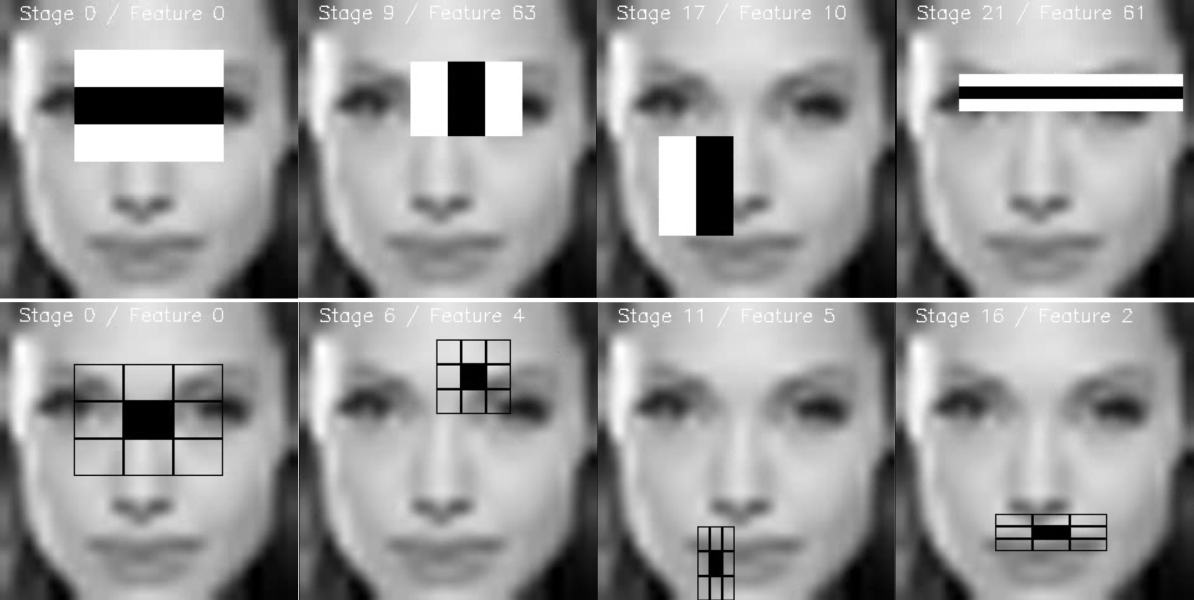

One of the most important steps in a face recognition system is actually detecting the faces in an image. Right? Without the faces, you can’t do any of the downstream tasks: person classification, gender classification, emotion classification, and so on. If you’re a Computer Vision practitioner, you’re probably familiar with OpenCV, an open-source library with Python bindings for a wide variety of computer vision tasks. Within OpenCV, there’s a popular face detection module based on Haar feature-based cascade classifiers (the Viola-Jones method). You can read more about the technique here. Basically, it trains a cascade of classifiers on simple rectangular (Haar-like) features that tend to appear in images with faces, and learns the general pattern of a face from the contrast between lighter and darker regions of the image. In the original paper, the authors claim to have achieved 95% accuracy in face detection.
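To make the OpenCV side concrete, here is a minimal sketch of face detection with the frontal-face cascade file that ships with the `opencv-python` package. The function names `box_to_slices` and `detect_faces_haar` and the parameter values (`scale_factor=1.1`, `min_neighbors=5`) are assumptions chosen for illustration, not the settings used for the numbers in this post.

```python
def box_to_slices(box):
    """Turn an OpenCV-style (x, y, w, h) box into (row, column) slices."""
    x, y, w, h = (int(v) for v in box)
    return slice(y, y + h), slice(x, x + w)


def detect_faces_haar(image_path, scale_factor=1.1, min_neighbors=5):
    """Return (boxes, crops) found by OpenCV's bundled frontal-face cascade.

    scale_factor and min_neighbors are illustrative defaults (assumptions).
    """
    import cv2  # imported lazily so box_to_slices works without OpenCV

    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)

    # The cascade operates on a single-channel grayscale image.
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    boxes = cascade.detectMultiScale(
        gray, scaleFactor=scale_factor, minNeighbors=min_neighbors
    )

    # Each box is (x, y, w, h); crop the matching region from the color image.
    crops = [image[box_to_slices(box)] for box in boxes]
    return boxes, crops
```

Raising `min_neighbors` generally trades missed faces for fewer false positives, so it is one of the first knobs to try if the detector keeps seeing random objects as faces.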

Now comes Deep Learning. When it comes to computer vision, Deep Learning algorithms blow away all other models in terms of accuracy. In fact, the success of Convolutional Neural Networks (CNNs) on the ImageNet dataset sparked the current hype around AI. Before CNNs, accuracy improved by only a few percentage points each year. However, Geoffrey Hinton and his team changed the world by becoming the first team to achieve over 75% accuracy, with a margin of 10.8 percentage points over the second-best entry. Relative to how ImageNet accuracy had improved before CNNs, this result was a decade’s worth of advancement in a single year. The success is due to the CNN’s ability to learn features from an input image, as opposed to the manual feature engineering done in traditional machine learning.

In the Multi-Task Cascaded Convolutional Neural Network (MTCNN), face detection and face alignment are done jointly, in a multi-task training fashion. This allows the model to better detect faces that are initially not aligned.
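For comparison, here is a hedged sketch of MTCNN detection. It assumes the open-source `mtcnn` Python package (the post does not say which implementation was used), whose `detect_faces` method returns one dictionary per face with a `box`, a `confidence` score, and five facial `keypoints`. The helper `clamp_box` and the `min_confidence=0.9` threshold are hypothetical additions for illustration.

```python
def clamp_box(box, width, height):
    """Clip an (x, y, w, h) box to the image bounds and return (x, y, w, h)."""
    x, y, w, h = (int(v) for v in box)
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(width, x + w), min(height, y + h)
    return x0, y0, max(0, x1 - x0), max(0, y1 - y0)


def detect_faces_mtcnn(image_path, min_confidence=0.9):
    """Return a list of {"box", "keypoints", "crop"} dicts, one per face.

    Assumes the `mtcnn` package; min_confidence is an illustrative threshold.
    """
    import cv2  # imported lazily so clamp_box works without these packages
    from mtcnn import MTCNN

    bgr = cv2.imread(image_path)
    if bgr is None:
        raise FileNotFoundError(image_path)
    rgb = cv2.cvtColor(bgr, cv2.COLOR_BGR2RGB)  # the detector expects RGB
    height, width = rgb.shape[:2]

    detector = MTCNN()  # in a real loop, build the detector once and reuse it
    faces = []
    for face in detector.detect_faces(rgb):
        if face["confidence"] < min_confidence:
            continue
        # Boxes may extend past the image edge, so clip before cropping.
        x, y, w, h = clamp_box(face["box"], width, height)
        faces.append({
            "box": (x, y, w, h),
            "keypoints": face["keypoints"],  # eyes, nose, mouth corners
            "crop": rgb[y:y + h, x:x + w],
        })
    return faces
```

The `keypoints` entry is the alignment half of the multi-task output: the landmark positions come back alongside each bounding box rather than from a separate model.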

In the process of building our Face Attributes model (see the post here), the first step was to crop the faces from a list of images. The original dataset was UTK Face. I used both the Haar cascade and the MTCNN to build the cropped faces dataset. Importantly, each image in the UTK Face dataset contains only one individual, so if the face detector pulls out two or more faces from a single image, we know that the detector is making a mistake (perhaps by seeing a random object as a face). Here’s how the two methods compare:
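The counting logic behind this comparison can be sketched as follows. This is an illustration under stated assumptions, not the code used for the numbers here: `tally_detections` is a hypothetical helper, `detect` stands for any callable that returns a list of face boxes for an image, and "extra faces" is interpreted as every detection beyond the first in an image.

```python
def tally_detections(image_ids, detect):
    """Count crops, extra faces, and misses over a one-face-per-image dataset.

    detect(image_id) must return a list of face boxes (possibly empty).
    """
    stats = {"images": 0, "crops": 0, "extra": 0, "missed": 0}
    for image_id in image_ids:
        n_faces = len(detect(image_id))
        stats["images"] += 1
        stats["crops"] += n_faces
        if n_faces == 0:
            stats["missed"] += 1  # no face found at all
        else:
            # Only one person per image, so anything past the first
            # detection is assumed to be a false positive.
            stats["extra"] += n_faces - 1
    return stats


# Usage with a stand-in detector backed by a dictionary of made-up boxes.
fake_boxes = {
    "a.jpg": [(0, 0, 5, 5)],
    "b.jpg": [],
    "c.jpg": [(0, 0, 5, 5), (9, 9, 3, 3)],
}
print(tally_detections(fake_boxes, fake_boxes.__getitem__))
```

Passing the detector in as a callable means the same loop can be run with either detector without changing the counting code.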

Haar Cascade

- Number of images in UTK Face: 24,111

- Number of cropped faces using Haar cascade: 19,915

- Total number of extra faces from a single image: 947

Recall = (19915 / 24111)*100 = 82.60%

Precision = (18968 / 19915)*100 = 95.24%

MTCNN

- Number of images in UTK Face: 24,111

- Number of cropped faces using MTCNN: 21,666

- Total number of extra faces from a single image: 428

Recall = (21666 / 24111)*100 = 89.86%

Precision = (21238 / 21666)*100 = 98.02%
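The recall and precision figures for both detectors follow the same two formulas, which can be written as a small helper. The function name `estimate_metrics` is an assumption for illustration. It mirrors the calculation used above: recall is cropped faces over total images, and precision treats every extra face as a false positive.

```python
def estimate_metrics(total_images, cropped_faces, extra_faces):
    """Return (recall %, precision %) as estimated in this post."""
    recall = 100.0 * cropped_faces / total_images
    # Every extra face in a single-person image is counted as a false positive.
    precision = 100.0 * (cropped_faces - extra_faces) / cropped_faces
    return recall, precision


# Counts taken from the lists above.
for name, cropped, extra in [("Haar cascade", 19915, 947), ("MTCNN", 21666, 428)]:
    recall, precision = estimate_metrics(24111, cropped, extra)
    print(f"{name}: recall={recall:.2f}%, precision={precision:.2f}%")
```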

Note that these precision numbers are only estimates. If you want the exact numbers, you’d need to manually look at all the cropped faces and count how many of them actually contain a face. That is quite a heavy lift, but these numbers should give you a rough idea of how the two methods compare.

The advantage of the Haar cascade is that it is extremely fast. The whole process took around 954 seconds (25 images/sec), while the MTCNN took roughly 2 hours (3 images/sec). [I used a CPU for this. It could’ve been much faster with batched computing on a GPU.] One noteworthy limitation of the Haar cascade is that its output bounding box is a square, whereas the MTCNN outputs an arbitrary rectangle that covers the face.

If you’re not concerned with speed, MTCNN performs considerably better. If speed is your concern, you could use MTCNN to crop the faces when building your dataset and training your model, then use a Haar cascade during real-time inference to crop the faces before feeding them into another model.
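The throughput figures quoted above follow directly from the image count and the elapsed times. A quick check, assuming "roughly 2 hours" means 7,200 seconds (the helper name `images_per_second` is hypothetical):

```python
def images_per_second(num_images, elapsed_seconds):
    """Average throughput over a full pass of the dataset."""
    return num_images / elapsed_seconds


NUM_IMAGES = 24111          # images in UTK Face, as listed above
HAAR_SECONDS = 954          # reported Haar cascade run time
MTCNN_SECONDS = 2 * 3600    # "roughly 2 hours", taken as exactly 2 (assumption)

print(f"Haar cascade: {images_per_second(NUM_IMAGES, HAAR_SECONDS):.1f} images/sec")
print(f"MTCNN: {images_per_second(NUM_IMAGES, MTCNN_SECONDS):.1f} images/sec")
```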
